Putting knowledge in its place

When we talk about adding context to AI-enhanced interfaces, we’re usually focused on feeding LLMs the right information. Writing the best prompts, connecting to data stores, that kind of thing. But what about providing context to us, the human users?

Let's look at the typical back-and-forth with a chatbot: you ask something, it responds, and so on. Say you’ve heard about a fun new concept called condensation and you want to learn more about it.

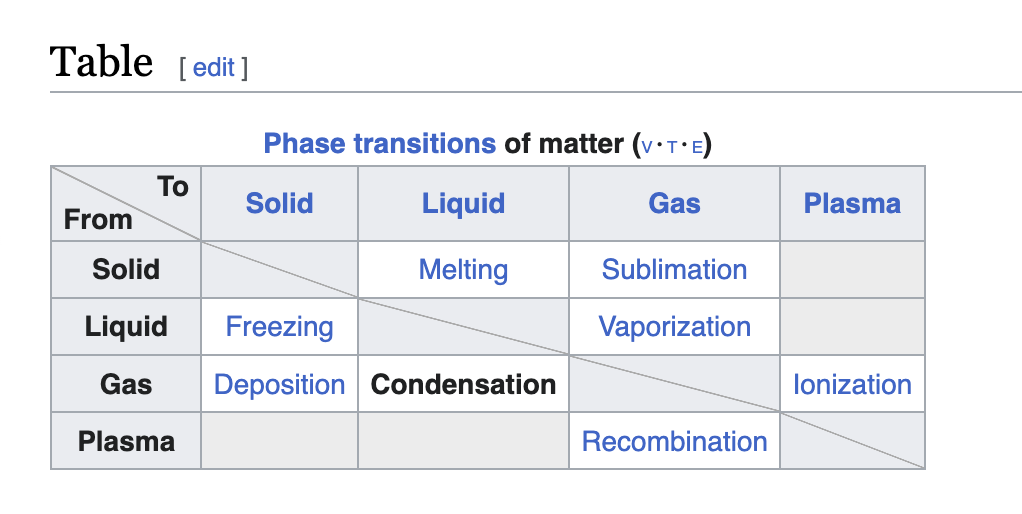

This is a great answer! We've learned what condensation is and when it happens, and we even got an example. What would be wonderful, though, is also seeing the frameworks this knowledge is usually presented within. If we look at the Wikipedia entry for condensation, we see this lovely table:

Looking at this table, I can quickly see what the phases are, what the other transitions are called, and even that a transition's name depends on its direction. On top of that, I can easily continue down the rabbit hole by clicking any of the links in the table to learn about other phase transitions. Asking follow-up questions to a chatbot, by contrast, can feel like searching a dark room with a flashlight you can only switch on for a second at a time.

I'm not necessarily saying that the Wikipedia entry is better than the chatbot response. But it would be helpful to get some of the surrounding context along with the chatbot response, context that isn't strictly information about condensation.

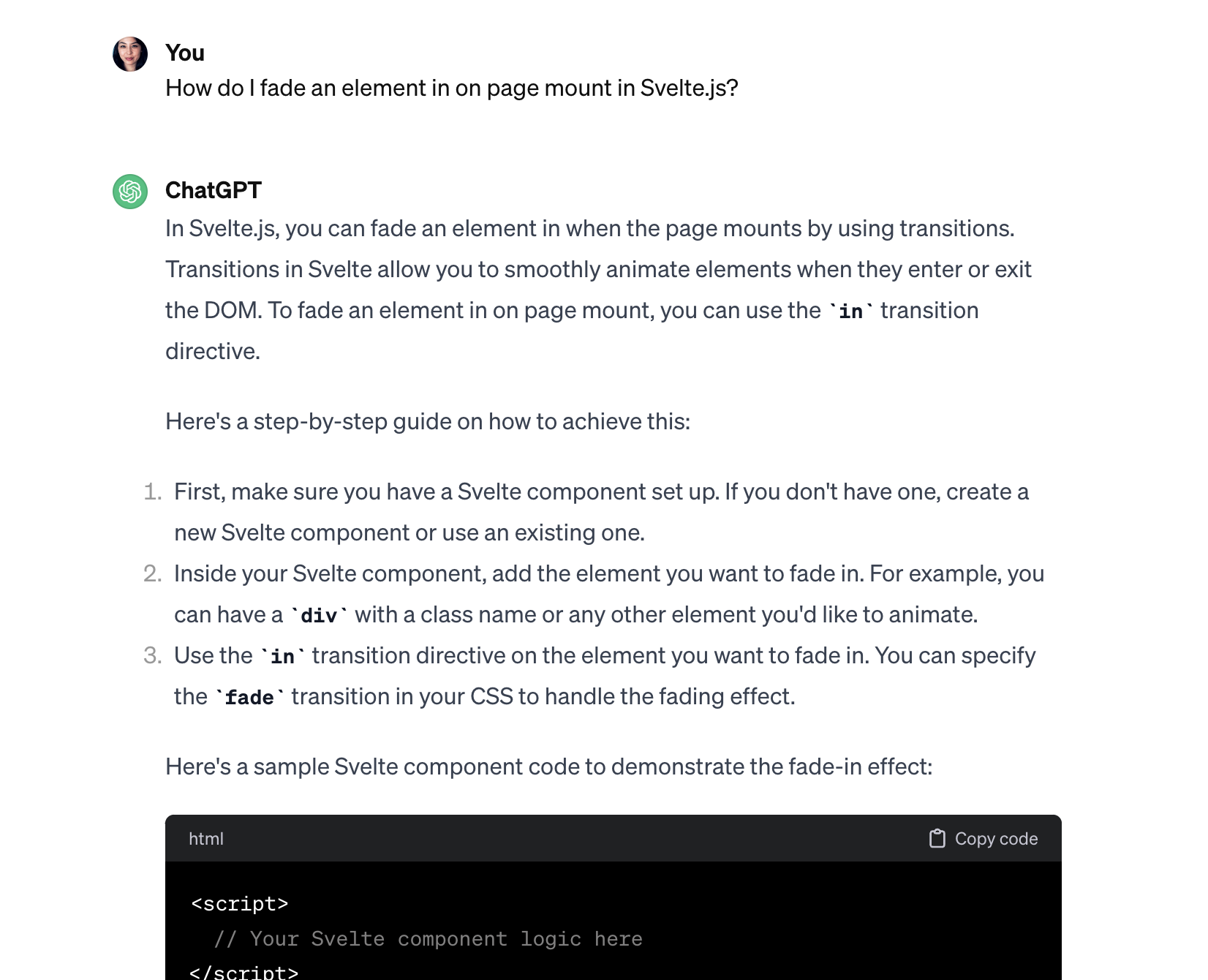

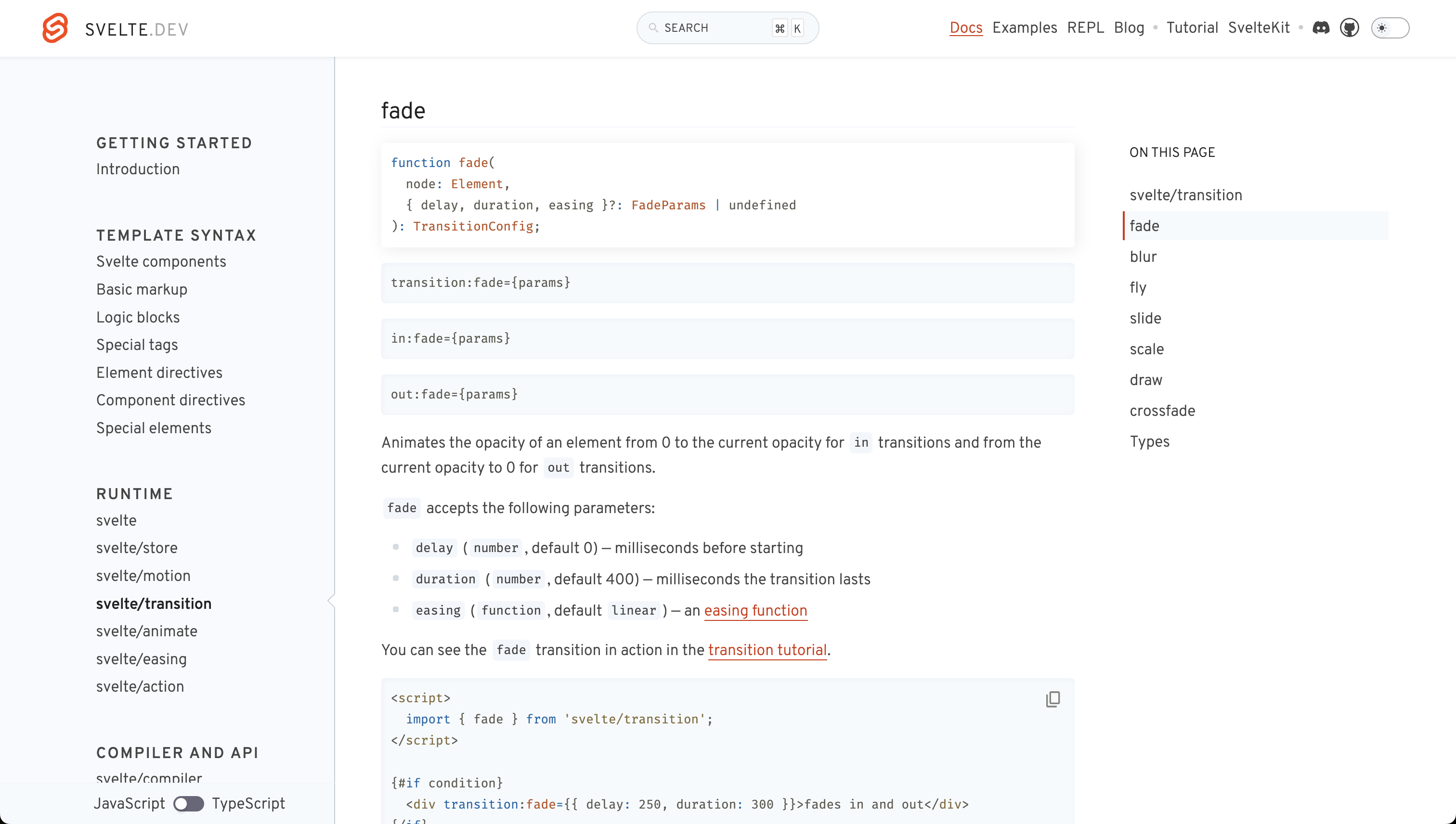

Here's another example that sticks out to me: let's say I'm building a website with the Svelte framework and I want an image to fade in. I can ask my friend ChatGPT for help:

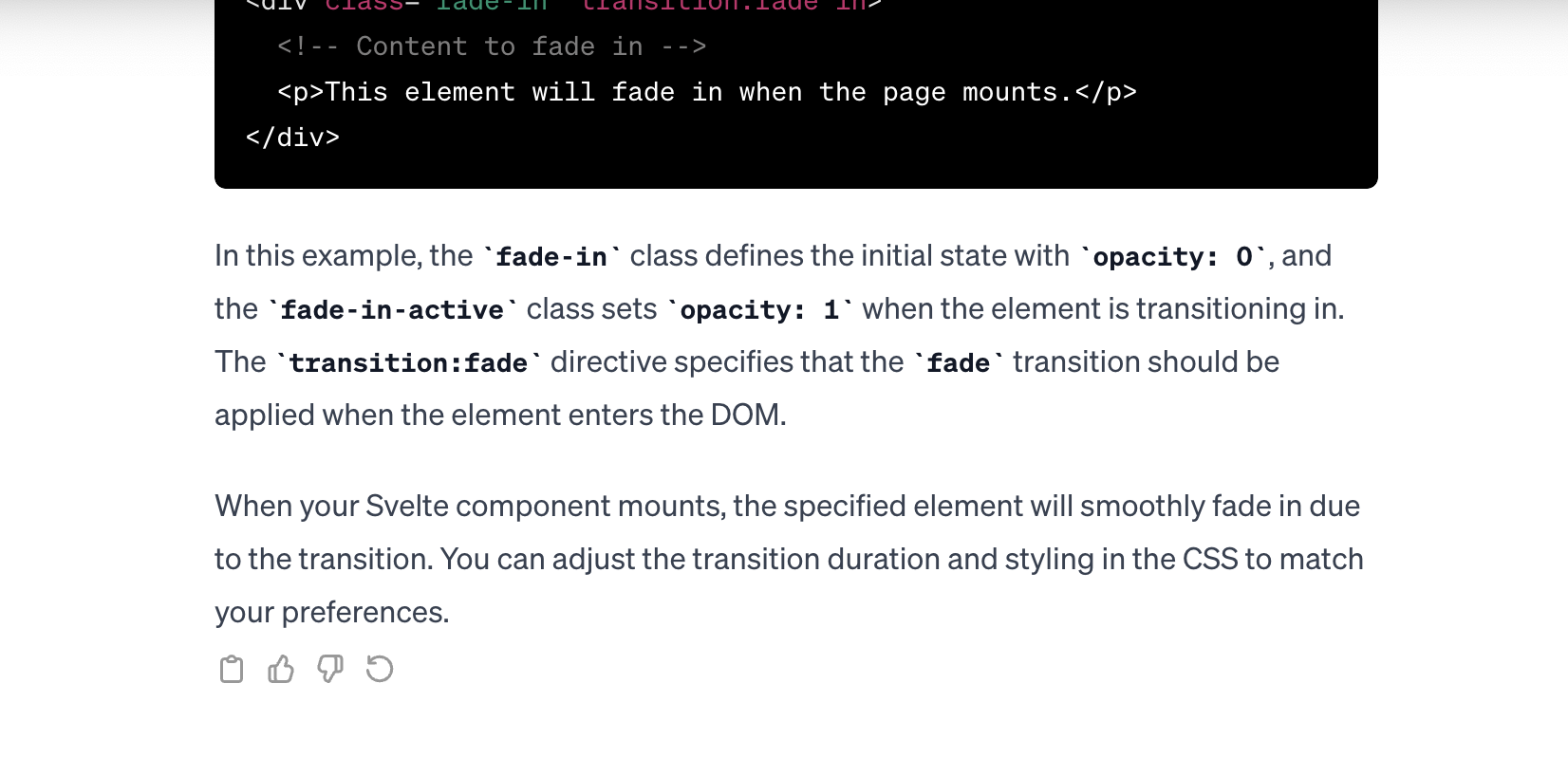

This answer is perfectly helpful and can get me on my way. But looking at the Svelte documentation gives me a great idea of other types of transitions, without making me explicitly look for them:

And zooming out a bit, I can see other important Svelte concepts.

I might see that there's also a svelte/animate module and learn about that for future reference. Unknown unknowns are hard to stumble on when you're only given the information you explicitly ask for.

It feels important that these two examples (the phase change table and the Svelte documentation topics) show structured data that isn't presented as raw text. This leaves room for related concepts that feel more lightweight: easy to ignore or to scan quickly.
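To make the "structured data" idea a bit more concrete, here's a minimal TypeScript sketch of a chatbot answer that carries the phase-transition table as data next to its prose. The `AnswerWithContext` shape and the function names are hypothetical, not any real chatbot API; the transition names themselves are the standard ones.

```typescript
// Hypothetical shapes: a prose answer plus a lightweight, scannable context table.
type Phase = "solid" | "liquid" | "gas" | "plasma";

interface PhaseTransition {
  from: Phase;
  to: Phase;
  name: string;
}

interface AnswerWithContext {
  text: string;                    // the prose answer the chatbot already gives
  relatedTable: PhaseTransition[]; // extra context the reader can scan or ignore
}

// Standard phase-transition names. Direction matters:
// liquid -> gas is vaporization, gas -> liquid is condensation.
const transitions: PhaseTransition[] = [
  { from: "solid", to: "liquid", name: "melting" },
  { from: "liquid", to: "solid", name: "freezing" },
  { from: "liquid", to: "gas", name: "vaporization" },
  { from: "gas", to: "liquid", name: "condensation" },
  { from: "solid", to: "gas", name: "sublimation" },
  { from: "gas", to: "solid", name: "deposition" },
  { from: "gas", to: "plasma", name: "ionization" },
  { from: "plasma", to: "gas", name: "recombination" },
];

const answer: AnswerWithContext = {
  text: "Condensation is the change of matter from a gas to a liquid.",
  relatedTable: transitions,
};

// Look up the name of a transition in a given direction, if the table lists one.
function transitionName(from: Phase, to: Phase): string | undefined {
  return answer.relatedTable.find((t) => t.from === from && t.to === to)?.name;
}

// Turn the table into simple rows an interface could render beside the prose.
function renderRows(table: PhaseTransition[]): string[] {
  return table.map((t) => `${t.from} -> ${t.to}: ${t.name}`);
}
```

Because the table is data rather than more paragraphs, an interface could render it as a compact grid beside the answer, and each row could link onward, much like the Wikipedia table does.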

What about outside of chat?

The same gap pops up in many demos of task-oriented AI applications. For example: ordering a pizza. You ask for a pizza, and presto, "Pizza ordered."

But often I want to know what kinds of pizza places are available or what the range of prices is. Is this pizza the best deal, the highest rated, or maybe some combination of the two? Showing where the chosen pizza falls in a distribution of prices or ratings would go a long way toward making me feel oriented.
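Here's a minimal TypeScript sketch of that orientation step, assuming the assistant keeps the list of options it considered before ordering. The `Pizza` shape, `percentileRank`, `describeChoice`, and the sample prices and ratings are all made up for illustration.

```typescript
// Hypothetical shape for one option the assistant considered.
interface Pizza {
  place: string;
  price: number;  // in dollars
  rating: number; // e.g. 1 to 5 stars
}

// Percentile rank: share of values strictly below `value`, plus half of the ties,
// as a percentage. This is one common definition among several.
function percentileRank(values: number[], value: number): number {
  if (values.length === 0) throw new Error("need at least one value");
  const below = values.filter((v) => v < value).length;
  const equal = values.filter((v) => v === value).length;
  return (100 * (below + 0.5 * equal)) / values.length;
}

// Summarize where the chosen pizza sits among all the options.
function describeChoice(options: Pizza[], chosen: Pizza) {
  const prices = options.map((p) => p.price);
  const ratings = options.map((p) => p.rating);
  return {
    priceRange: [Math.min(...prices), Math.max(...prices)] as [number, number],
    pricePercentile: percentileRank(prices, chosen.price),
    ratingPercentile: percentileRank(ratings, chosen.rating),
  };
}

// Made-up sample data.
const options: Pizza[] = [
  { place: "A", price: 12, rating: 4.1 },
  { place: "B", price: 15, rating: 4.6 },
  { place: "C", price: 18, rating: 4.8 },
  { place: "D", price: 10, rating: 3.9 },
];
```

On the sample data, `describeChoice(options, options[1])` gives a price range of 10 to 18, with the chosen pizza at the 62.5th percentile for both price and rating. Numbers like these could be drawn as a single dot on a small strip chart instead of a bare "Pizza ordered."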

This is a silly example, but I would love to see as much context baked into LLM-generated responses as possible.

Join me, for a minute, in the ✨ AI-free zone ✨.

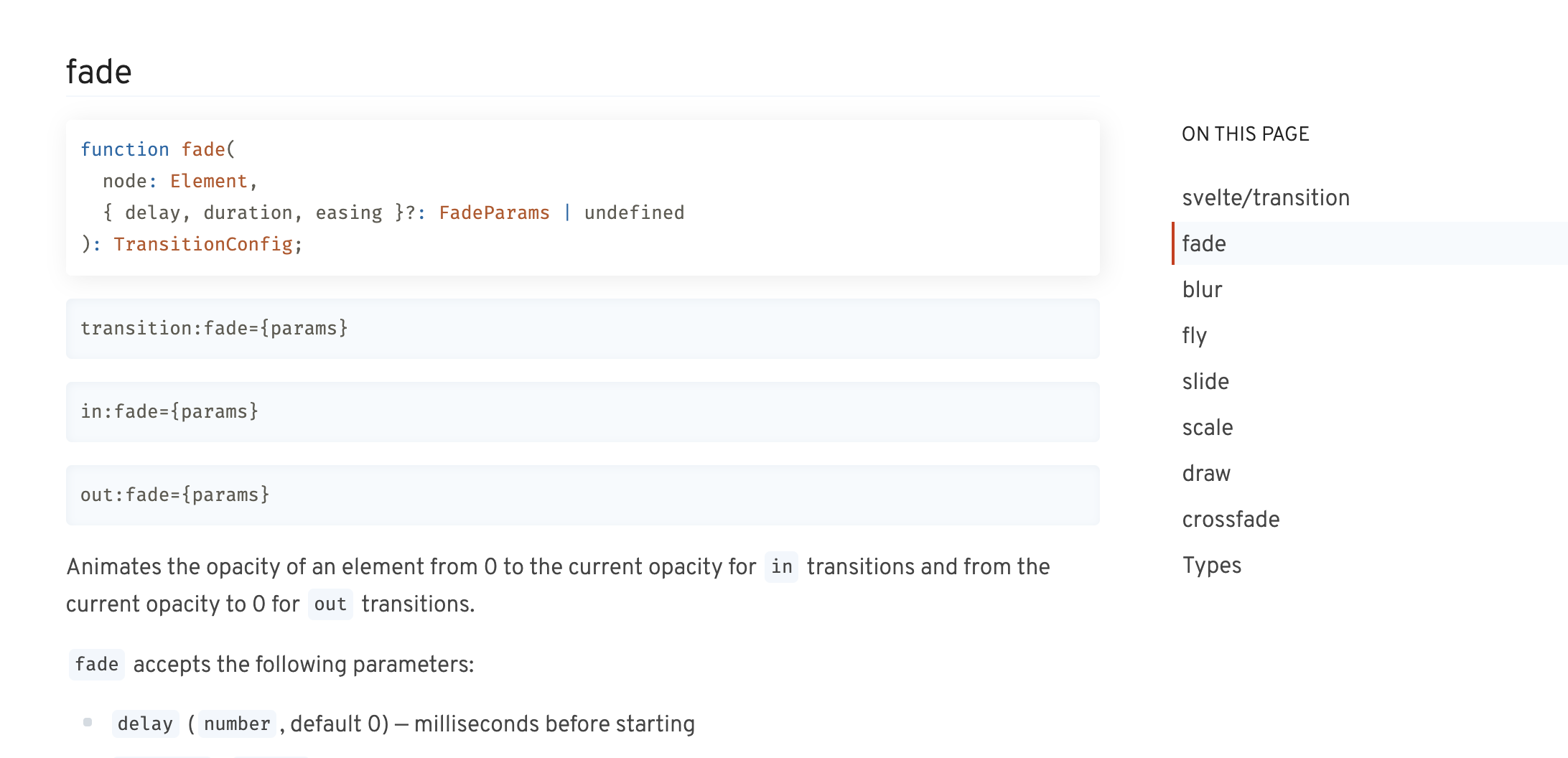

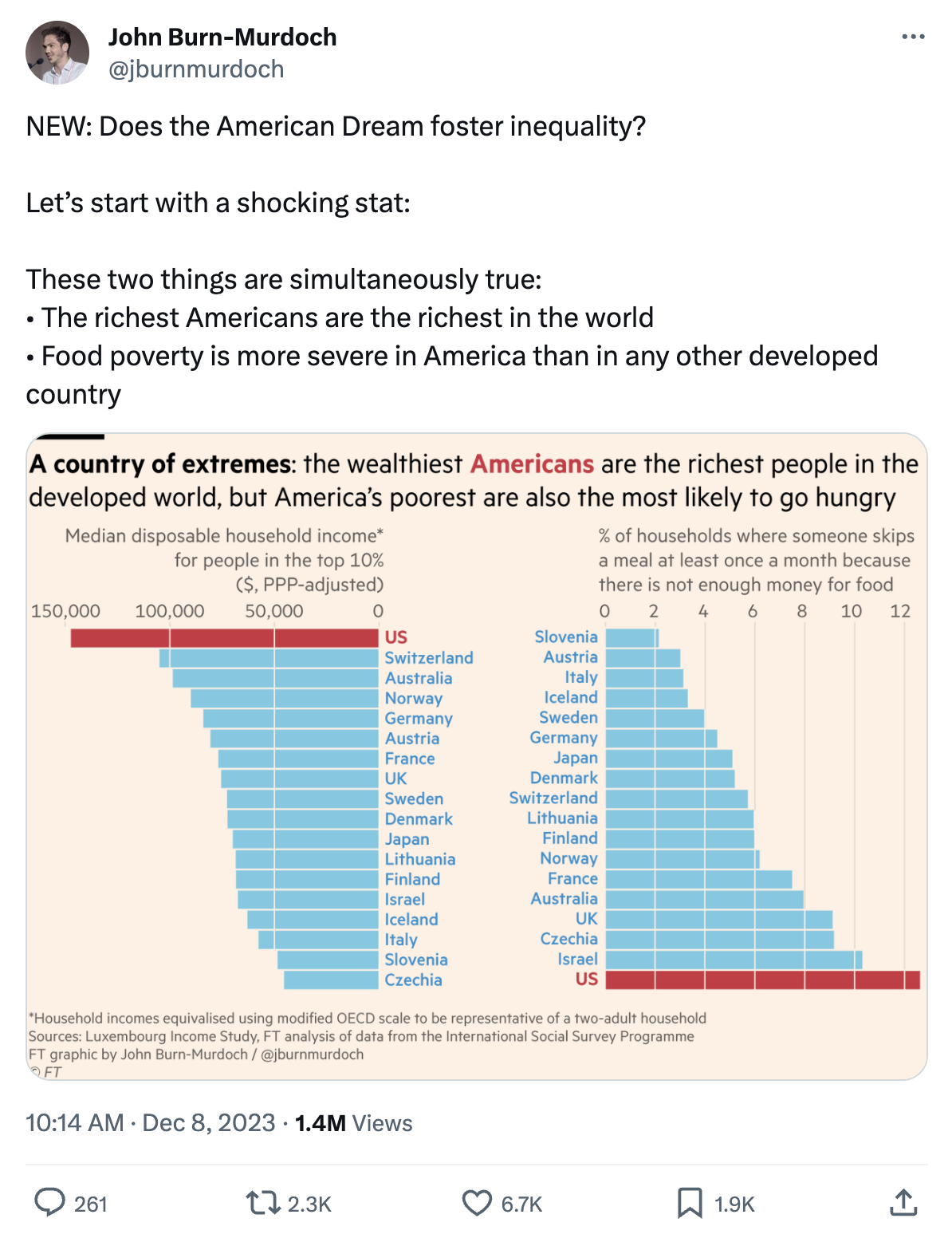

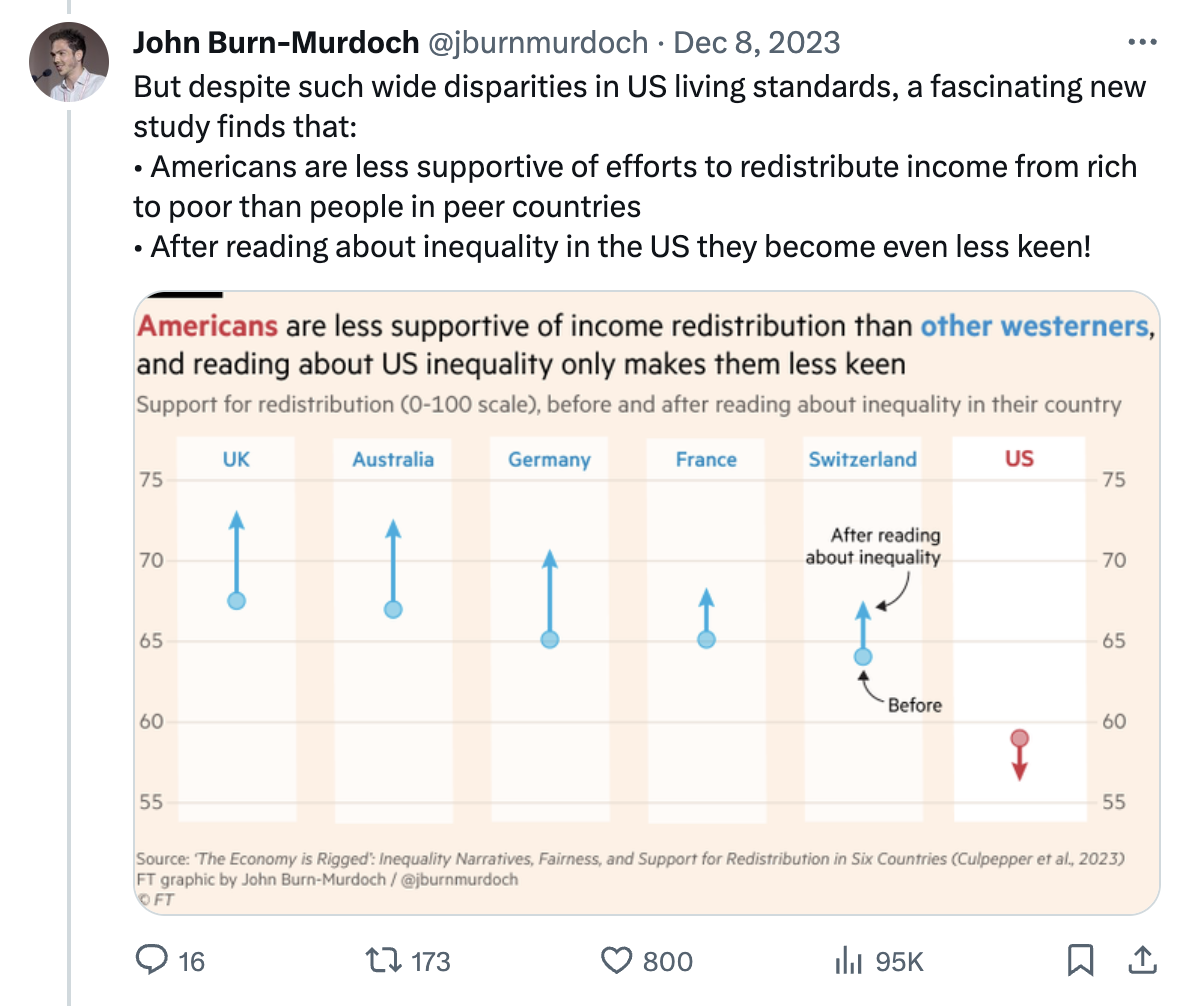

John Burn-Murdoch is a wonderful reporter at the Financial Times who writes these hugely interesting Tweet threads walking through a concept, backing up points with basic charts. Let's take this thread on American inequality as an example:

He starts with two facts: "The richest Americans are the richest in the world" and "Food poverty is more severe in America than in any other developed country". But a tweet with just those two facts would fall quite flat. Backing up these points with charts really paints a fuller picture. For example, I can quickly see that Switzerland is the next richest country, although the top 10% in the US have almost 50% more median disposable household income than Switzerland's top 10%. And only 15 other countries make even one third of what the US does.

The Tweet thread goes on, building a very rich picture using only simple charts.

Honestly, wanting to document the beauty of JBM's tweets is a big part of why I wrote these thoughts down. They're a great illustration of how filling in some of the surrounding, not-explicitly-related context can make a real difference in understanding.

I often find myself drawing parallels between these AI interactions and data visualization: in both, the blanks need filling in with more context. Adding context isn't just about making things fancy; it's about meeting users' growing expectations of AI-driven tools.

So, where do we go from here? Imagine interfaces that anticipate what you need before you ask and present information in a way that makes sense right off the bat. There are challenges to tackle, though, such as privacy and not overwhelming users with too much information.

Okay, back to talking about AI.

The point I'm trying to make here is really just a small one: couching AI-generated responses in larger frameworks and related context leads to much richer, stronger understanding. Whether it's adding structured data to chatbot responses, surfacing related concepts, or visualizing the larger picture, adding context goes a long way toward orienting the user.