Getting creative with embeddings

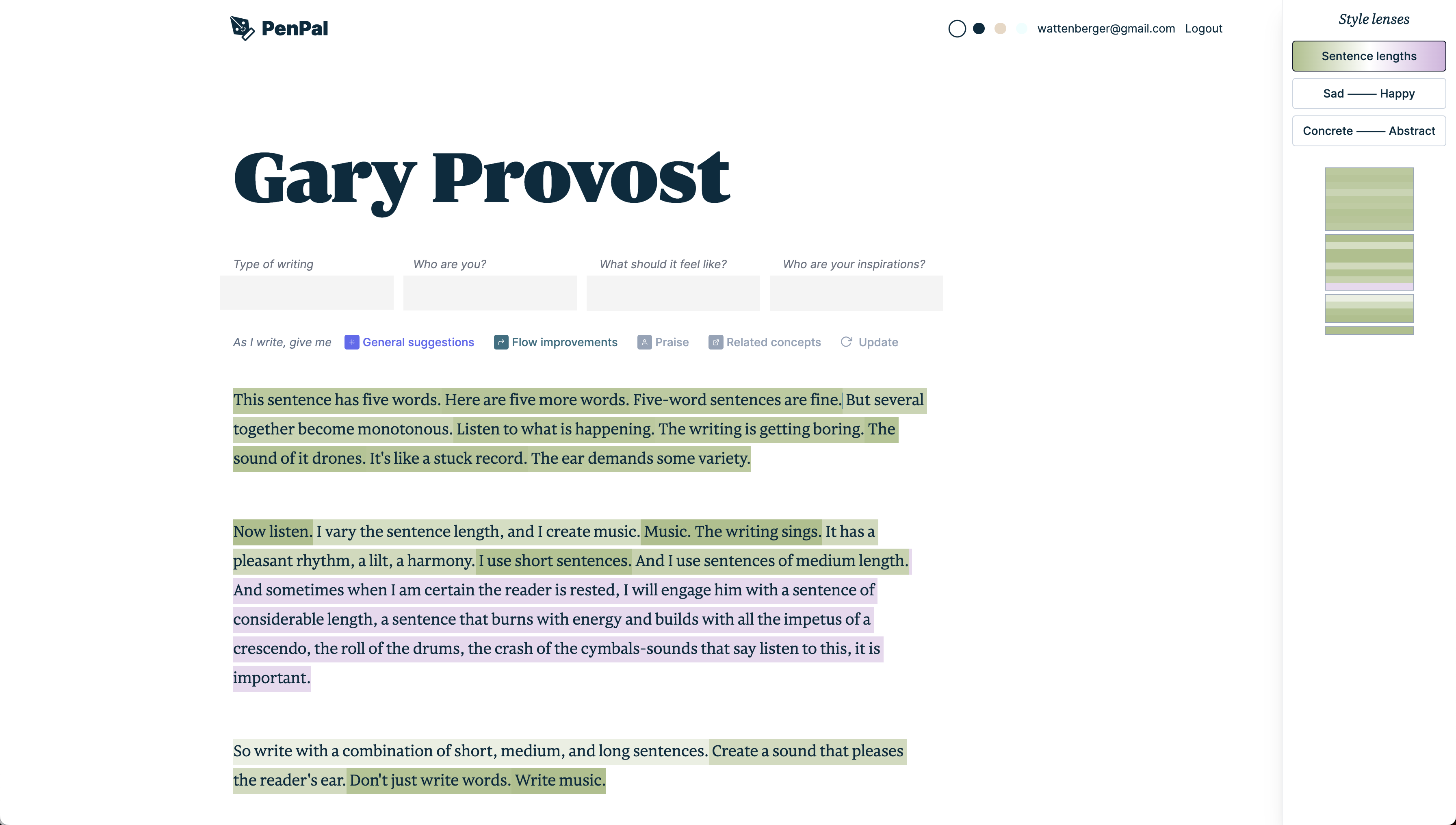

In defiance of the “AI will write everything for us” narrative, I’ve been noodling on a writing app, PenPal, focused on the opposite: making us better writers.

My process has involved a lot of research into how great writing instructors work. There’s one concept I find especially appealing: making the implicit explicit. Much of the work of becoming a better writer doesn’t involve writing, but instead taking a magnifying glass to others’ writing. Can PenPal make it easy to see Hemingway’s style, to compare Stephen King to John Steinbeck, or even to compare your writing style to Roald Dahl’s?

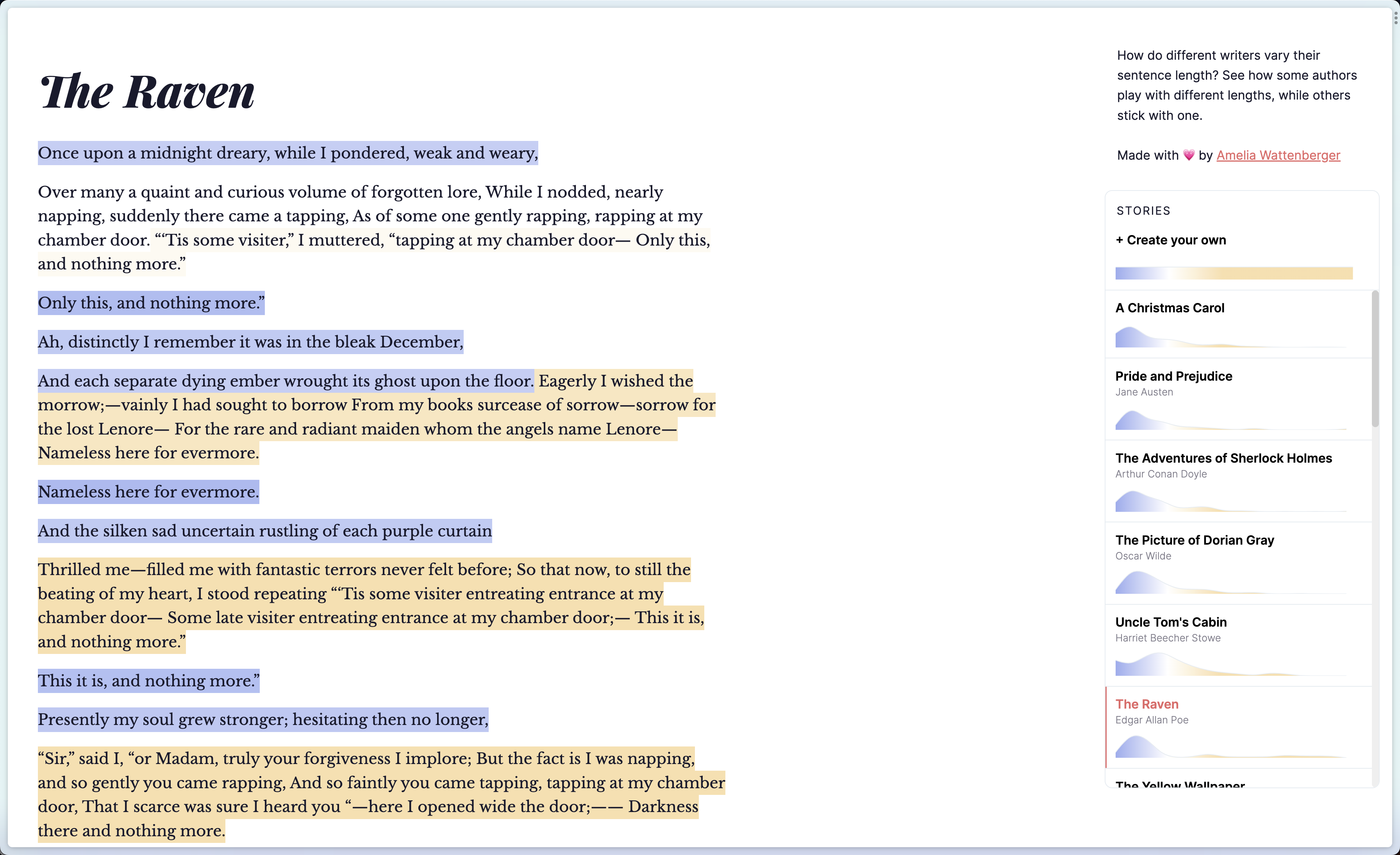

This actually isn’t a completely new idea for me. After seeing a popular example of the power of diverse sentence lengths, I create an app that highlights each sentence based on its length.

This was an easy add! Just loop over every sentence and map the character count to a color scale.

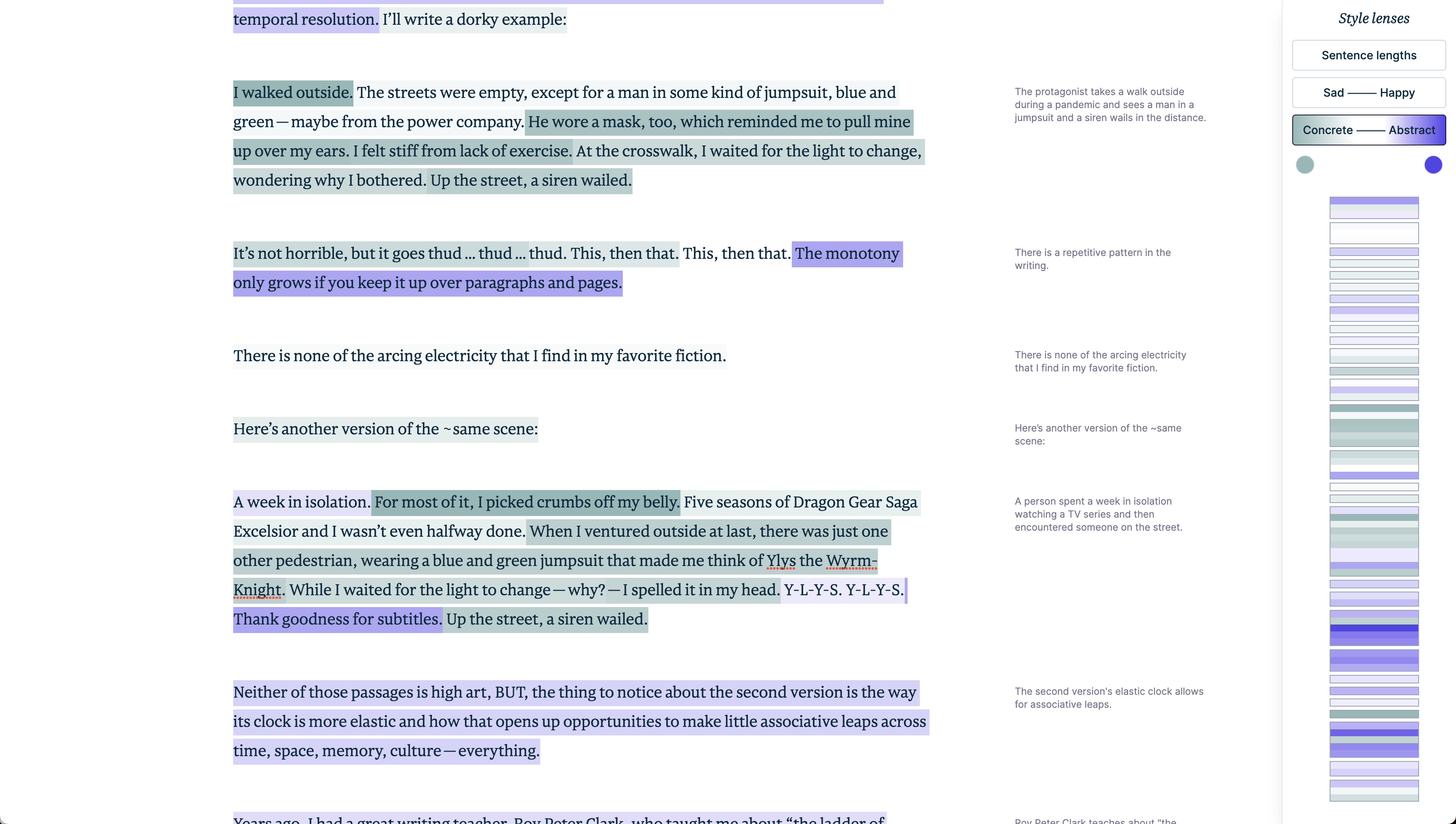

But I remembered an essay from Robin Sloan I had squirreled away in my notes a few years ago: Week 4, ladder of abstraction. He talks about “the ladder of abstraction”, introduced by Roy Peter Clark in his book “Writing Tools”:

Good writers move up and down a ladder of language. At the bottom are bloody knives and rosary beads, wedding rings and baseball cards. At the top are words that reach for a higher meaning, words like freedom and literacy. Beware of the middle, the rungs of the ladder where bureaucracy and technocracy lurk. Halfway up, teachers are referred to as full-time equivalents and school lessons are called instructional units.

What a wonderful concept! By making it easy to scan the "abstractness" of your writing, we can better identify the "middle rungs" or long stretches on either side of the ladder.

So, how do I get the “abstractness” of each sentence in a user’s writing? Using a more traditional method with an “abstractness level” for every word and averaging across a sentence would surely miss lots of nuance. So, can we use AI?

A naive method might be to put on our Prompt Engineer hat and ask GPT “how abstract is this sentence, on a scale from 1 - 10”. But there isn’t any rigor to that scale – no anchors for any specific number. Plus, how will GPT define “abstract” or concrete. And double plus, we all know GPT isn’t good at math.

Another option would be to create or fine-tune a custom model. This is tempting, but the end scale would be expensive, slow to create, and hard to update.

Finally, we get to why I wanted to write this piece - something I haven’t seen before but might just work. Since we have access to OpenAI’s embeddings model, we can convert a word or phrase to a specific location in embedding space. Given a few samples of the “extreme” ends of our scale, we can average those into a specific location and map any new sample's location onto the vector between the two.

There are many benefits to this method:

- the embeddings model is relatively cheap: as of August 8th, 2023, 1,000 tokens costs just $0.0001, compared to $0.03 for GPT-4

- the scale is easy to create: just a few examples of the “extreme” ends

- the returned number is interpretable: a sentence with a score of 0.5 is halfway between the two examples

Here’s how it looks in action:

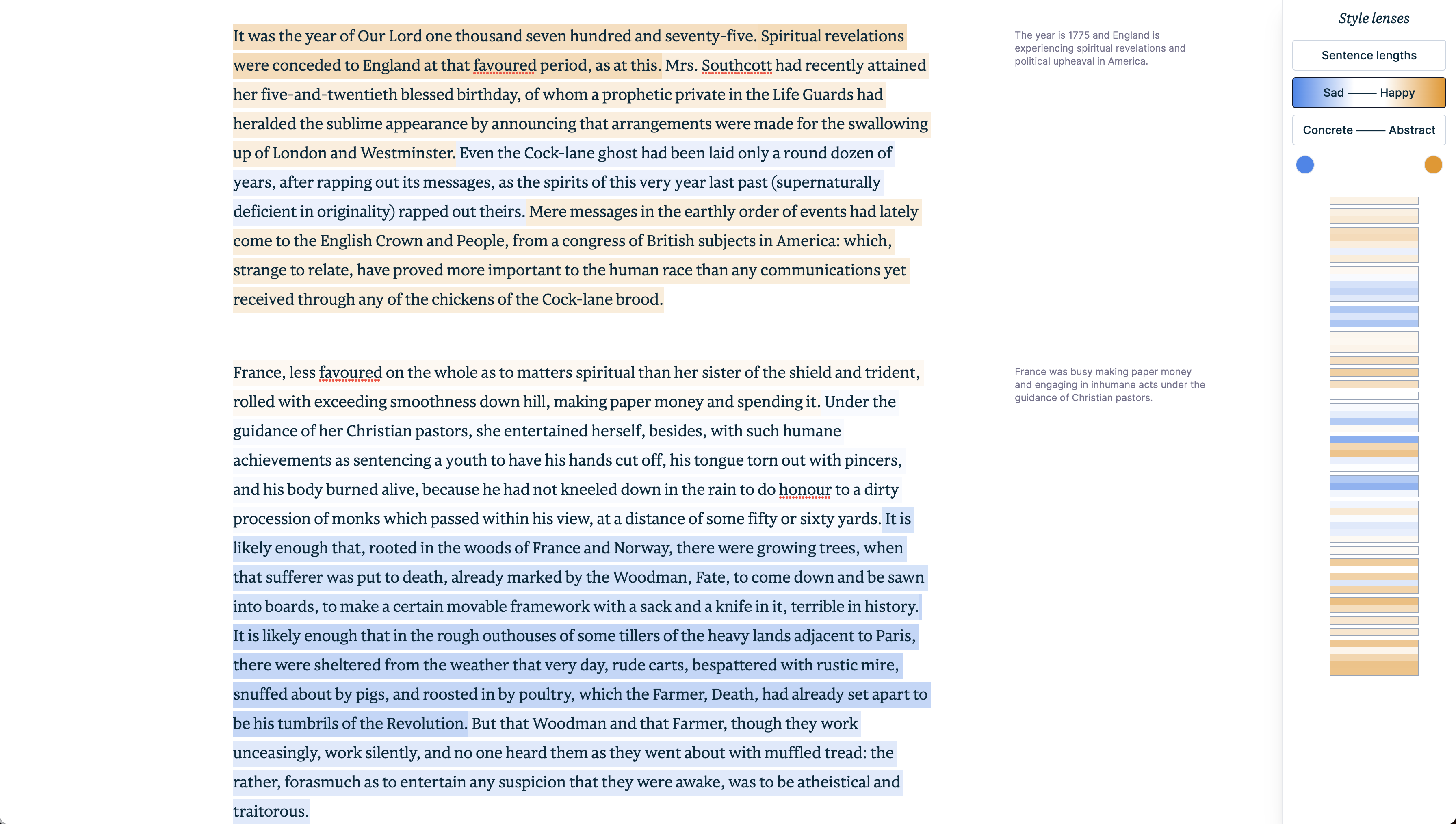

One of the benefits I’m most excited about is the ability for users to easily create their own custom scales. For example, a sentiment scale would involve a few “sad” words for the negative end and a few “happy” words for the positive end.

Of course, this method isn’t perfect. It will work much better for some types of scales than others. It also might be biased by sentence length. But after some initial experimentation, I found that both a Concrete – Abstract and Sad – Happy scale work quite well. I’ll report back when I’ve played around more, and I would love any thoughts from you on Twitter!